On Being Officially Classed as a Robot

Reddit has decided that I am a robot, and robots can't play on Reddit, so my account has been banned. That has consequences for this site, because I had various links to Reddit posts scattered throughout my blog. So to fix those links, I had to dust off my Nikola-based blog machinery and update the links to use archive.org versions instead. While I was at it, I figured I might as well write a post about the experience.

The tl;dr version is something we all should know: when we pay nothing to participate in a service run by a major tech company that achieves its scale mostly through automation, it's not only the case that we are the product being sold to advertisers, but also that we lack any meaningful power in the relationship. For as long as things keep working, everything is fine, but at any moment, you can find yourself left wondering what just happened as an online identity winks out of existence without warning or recourse. Thinking the posts you made on Reddit are something permanent you can link to is a mistake when the company neither feels nor has any obligation to you. In the rest of this post, I'll say a little more about what I've been up to lately, and how I came to be classified as a bot by Reddit.

Melissa's One Trick

Before we dive in, a bit of background. If there's one thing I'm known for, one classic Melissa move, it's calling bullshit on things. In fact, if we look at my record, PCG can be seen as calling bullshit on the idea that linear congruential generators are crap and should never be the basis of a random-number generation system, and before that, The Genuine Sieve of Eratosthenes tore apart a popular example of a purported lazy functional prime sieve. And it's not just my academic writing: I'm frequently the only (or first) person to call bullshit in faculty meetings at my institution; a favorite example from a few years ago was when our college CIO had the faculty in rapt attention purportedly talking about what Computing and Information Services had done all year, and I observed that he'd spent his entire time talking about his org chart and nothing they'd actually done, breaking the spell.

I'm not trying to be a jerk, or to be a contrarian just for the sake of it, but if there's something that seems wrong or misleading, something important that other people seem to be missing, I feel compelled to speak up. So, with that approach in mind, let's look at what I've been up to lately.

Melissa's Recent Activities

The last time I updated this blog was in 2018 (!!), so a good bit of time has passed. One thing that certainly slowed things down on the posting front was becoming department chair of Mudd's Computer Science department, which occupied a lot of my attention. When the COVID-19 pandemic hit in early 2020, I stepped down from that role, but during lockdown I spent more time focused on retro-computing projects than random-number generation, although I did and do have some as yet unpublished work in that area, too. I also ended up building my own learning management system, which is used for the third course in my department's intro sequence. It provides interactive online lessons with progress tracking and integrated questions for engagement; more recently I added AI-driven responses for free-form text answers. Students really like it, and as an instructor, it's a delight that it's driven by text-based sources rather than a web portal from hell, and that it requires almost zero infrastructure to run. Although it's used for multiple courses at Mudd, and I even bought a domain name for it, it's not quite ready for a broader user base yet, so I won't link to it here. Yet.

And lastly, I've been very engaged with the recent advances in AI systems, and LLMs in particular. Back in May 2020 (when GPT-2 was the state of the art), I installed ParlAI from Facebook Research, and got to see what a transformer-based chatbot could do. Here's what I told a colleague at the time:

> I found the conversation surprising in various ways. There are various imperfections, and it's very resource-hungry and slow, but we are at a point where we have a machine you can have a somewhat meaningful conversation with; albeit a machine that few would argue has the kind of intentionality that would mark conscious entities in the normal sense of the word.

I was intrigued by the possibilities, but I mostly set aside investigating LLMs until June 2022 when the media got excited by Blake Lemoine's claims about LaMDA being possibly sentient. Lemoine's claims were widely ridiculed in the media, and we can see from my own 2020 experiments that I hadn't been aligned with Lemoine's views. But when I'd expressed myself back then, I'd done so cautiously, hedging with “few would argue” and “normal sense of the word”; in contrast, 2022's media coverage was far more sweeping in its dismissive tone. And that, of course, set off my bullshit detector.

So I returned to looking at LLMs and tried both to understand what led Lemoine to make the claims he did and to confirm how wrongheaded and lacking in clear understanding much of the media coverage was. It's one thing to say that we had little evidence that LaMDA had some kind of inner life like our own, but quite another to say that there can't be anything like an inner life for a system like LaMDA because it's (merely) implemented using a neural network that fundamentally operates through matrix multiplication. From my perspective, that statement is exactly equivalent to claiming that I can't be conscious because I'm made of meat and depend on electrochemistry to function.

From there, I've found myself looking at the various bogus arguments people make about AI and thinking about ways to refute them. When I got a turn as director for our colloquium series, I was able to do some extra things in the service of that goal; I was able to invite both Daniel Dennett and Eric Schwitzgebel to speak at Mudd, with Dennett's possibly being one of the last talks he gave before he died. Both of these philosophers have written extensively about consciousness, and have argued that we should be careful about making claims about human specialness.

One frustration I have with many of the uncritically repeated claims about human specialness and (potential) AI capabilities is that most philosophers don't know much computer science, and most computer scientists don't know much philosophy of mind, so there are a lot of specious claims that get made without anyone really calling them out.

Although my academic qualifications are in computer science, I've long been interested in psychology and philosophy of mind (I even learned how to be a hypnotist in my teens and had fun hypnotizing friends), so I felt like I had a bit more perspective on these issues than most computer scientists. Still, I wasn't sure how seriously to pursue my own writings on these topics. In the end I decided that at the very least I could have a website and blog to post ruminations that had previously only been shared with a small group of colleagues and friends, and so I created reflections.team-us.org.

Melissa Writes Identity Horror

People don't always think rationally or clearly, so oftentimes a carefully thought out rational argument isn't enough to change minds. As a result, I often find myself using stories as a way to help people question ideas that they think of as being “common sense”. But I'd also been writing stories for the imaginative joy of it long before I realized I could use them for that purpose. Many of my stories could be seen as belonging to the identity horror genre, where the characters' sense of who they are is challenged in some way. Much of this work would be very much at home in an episode of Black Mirror; Apple's Severance TV series could be seen as identity horror, too.

Honestly, I think one aspect of what drives my fascination with stories about loss of self is that I've witnessed it happen with actual people, with dementia robbing first my grandmother and then my mother of what made them who they were. Genetics mean that a similar dissolution likely awaits me at some point in the future, so I may as well explore the topic through fiction before I get to experience it myself without being able to appreciate it.

My stories aren't all about loss of identity, of course; some are about self-discovery, and some are just about inhabiting a mind or personality different from one's own. It's not a huge surprise that, since I'm a computer scientist and avid reader of science fiction, stories about AI interest me and come out in some of my writing, either by telling shoe-on-the-other-foot stories that treat humans the way we treat AI, or by telling stories from an AI perspective.

Melissa Accidentally Writes a Novel

So, this is the background in which I ended up writing something longer than a short story or novella. Call me nutty if you like, but this time I had to call bullshit on Ranma ½, a manga series by Rumiko Takahashi that had already been made into an anime series, but that Netflix decided to remake as a new anime in 2024. The full story of why I did so is given in this post over on my new blog, but the tl;dr is that my spouse ended up joining in on the effort, we wrote something together, and it ended up being a full-length novel of about 125,000 words. On the one hand, it's pretty cool that I wrote a novel, at least partly to prove a point about identity and empathy, and that it turned out pretty well, but on the other hand, my first and possibly only novel being a work of fan fiction is a bit embarrassing. Oh, well.

When you write fan fiction, you can't sell it commercially, so I made a site for it at phoenix.team-us.org, where you can read it for free online or download it as an ePub file. Although you don't actually need to know anything about Ranma ½ to read it, it probably won't be everyone's cup of tea. At some point, I might put it on Archive of Our Own, too, but I haven't gotten around to that yet.

Melissa Gets Classed as a Bot and Banned from Reddit

We got the book finished in time for Christmas, and I decided it would be neat to do a couple of posts on Reddit saying that there was a free book people could read if they wanted to; kind of a Christmas present. I didn't want to go over the top, so I picked just two subreddits to post in, r/ranma (because it's Ranma ½, duh) and r/lgbt (because there were themes in the book that would likely appeal to that audience).

I actually have two accounts on Reddit. My main one is kept at arm’s length from my real identity; I wouldn't really mind if someone made the connection, but possibly my academic colleagues would be surprised how much time I've wasted posting on /r/CarvedFromSoap and /r/IndustrialShredders over the years (damn it, those were supposed to be made-up silly subreddits, but there actually is a subreddit for industrial shredders!). My other one is, or rather, was, my professional account, connected to my real name (/u/ProfONeill), which I'd used to post about PCG and also my solutions to Advent of Code challenges over the years.

Since I was being open about writing the book, I made the two posts about the book using that account. Alas, there were a couple of issues:

- That account hadn't been used in quite a while.

- I was posting to subreddits that the account hadn't really participated in before.

- I was posting about a domain that was basically unheard of.

These factors, of course, triggered Reddit's bot-detection algorithms, which are designed to identify and block spam accounts, many of which come from hijacked existing accounts. So my account was shadowbanned.

I didn't realize that until a moderator from the LGBT subreddit let me know I'd been classified as a bot and banned but that there was nothing they could do as it was a site-wide issue. I tried to appeal, but the response just told me that I'd violated their rules, should read them more closely, and that their decision was final. No indication of what rule or rules I'd allegedly violated and no way to respond or ask for more information. There was no indication that a human was involved in the appeal process at all.

Irony Is Ironic

I've been writing about the destruction of identity for some time, and there is definitely some irony that when I tried to share a story about someone finding their identity challenged and ultimately finding themselves, my own online identity was summarily erased by an automated system with no recourse.

And, having written stories about people discovering they were robots, to be classified as a bot myself is also pretty ironic.

So, how do I deal with that? While I'd love it if somehow an actual human being at Reddit woke up to the idea that destroying someone's online identity without warning or recourse is a bad idea in general, or at least in my specific case, and reinstated my account, that feels like a long shot. So, maybe I need to just live with the loss. That's a bummer, but it's good to have some perspective, and compared to actual harms people suffer in the physical world, it's pretty minor. I've already compensated for the damage by fixing the links here.

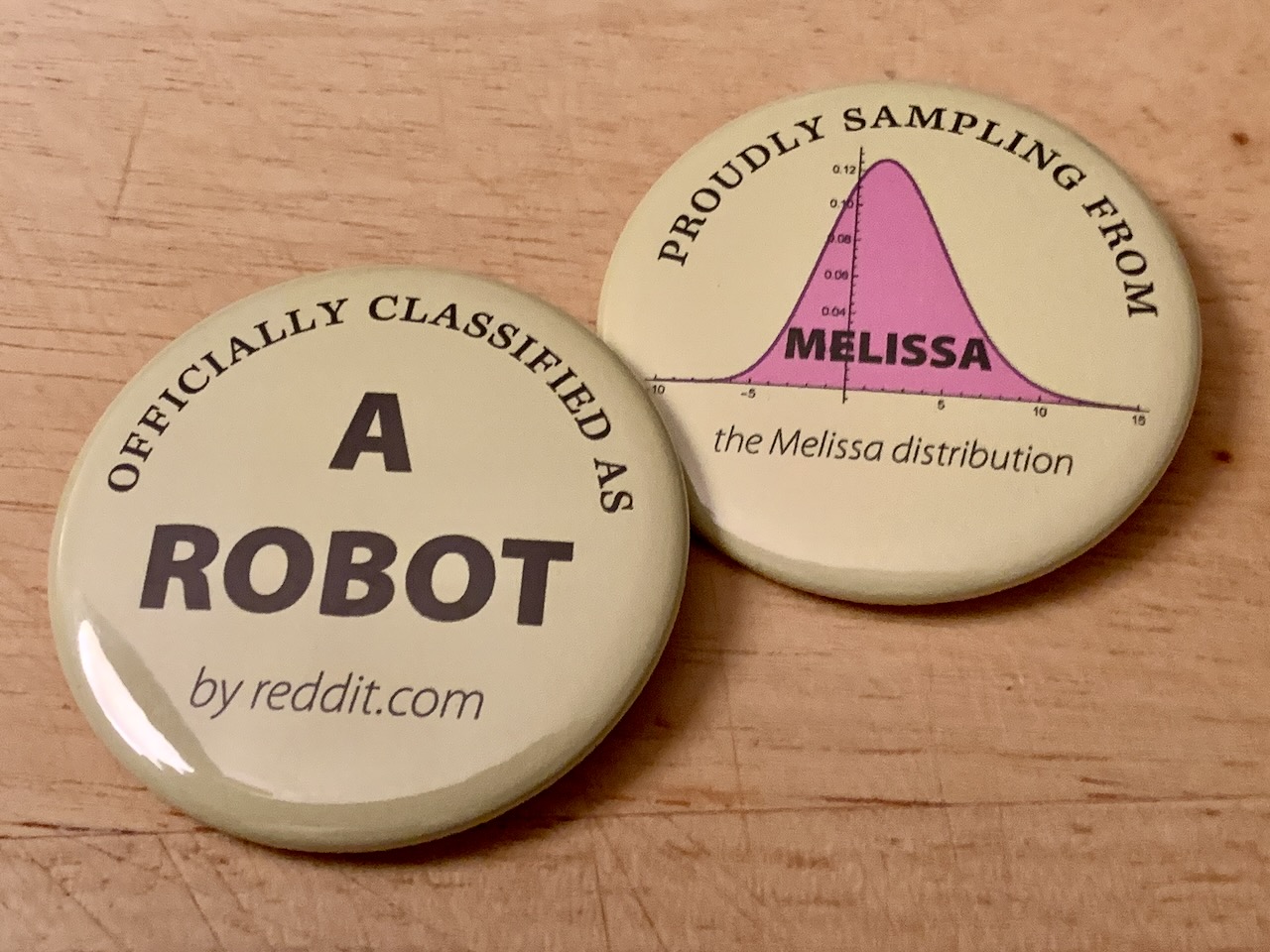

But I might as well try to have a bit of fun with it. I'd always wanted a button-making machine, and so I got myself a late Christmas present from American Button Machines to allow me to express myself in the analogue world even if I've been a bit silenced in the digital one. So now I have two buttons proclaiming my bot-ness, which you can see in the designs below:

And here they are as actually made 2-inch buttons:

Maybe I've been on team robot all along.